This year Apple’s flagship iPhone 12 Pro models come with LIDAR. Many people have heard of LIDAR, and Apple’s press conference mentioned it, but I have found that many people don’t really understand what it is.

Today, I would like to explain from the beginning what LIDAR is and what exactly it is for. I also want to explain why Apple put such a thing on its flagship models and how it could change our future and the world.

Let’s start with what LIDAR is. Wikipedia says this about it:

Lidar (/ˈlaɪdɑːr/, also LIDAR, LiDAR, and LADAR) is a method for measuring distances (ranging) by illuminating the target with laser light and measuring the reflection with a sensor. Differences in laser return times and wavelengths can then be used to make digital 3-D representations of the target. It has terrestrial, airborne, and mobile applications.

The term lidar was originally a portmanteau of light and radar. It is now also used as an acronym of “light detection and ranging” and “laser imaging, detection, and ranging”.[4][5] Lidar sometimes is called 3-D laser scanning, a special combination of a 3-D scanning and laser scanning.

Lidar is commonly used to make high-resolution maps, with applications in surveying, geodesy, geomatics, archaeology, geography, geology, geomorphology, seismology, forestry, atmospheric physics,[6] laser guidance, airborne laser swath mapping (ALSM), and laser altimetry. The technology is also used in control and navigation for some autonomous cars.

https://en.wikipedia.org/wiki/Lidar

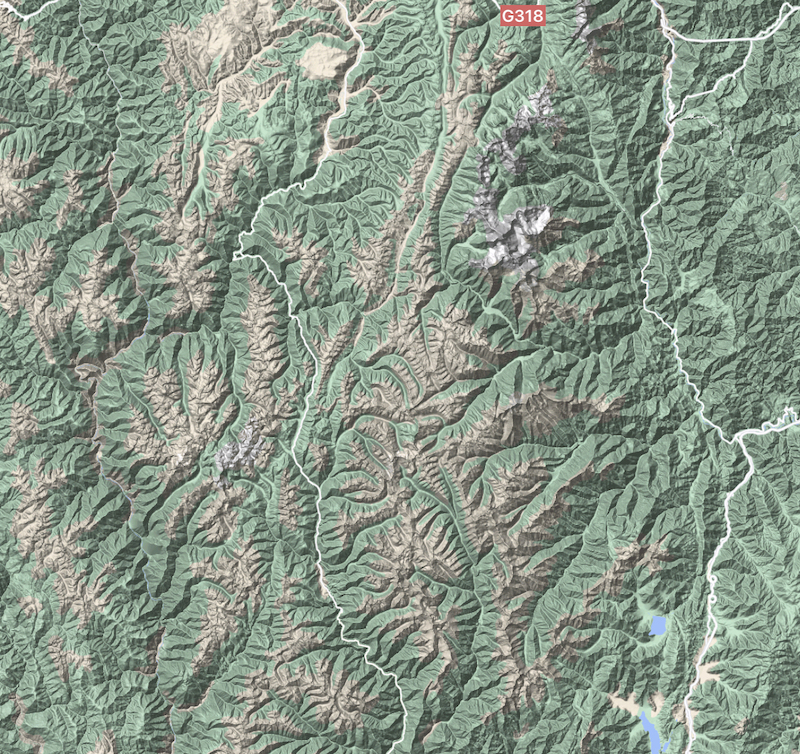

This technology was first used in remote sensing, a field far removed from everyday life. In fact, many of the topographic maps in Google Maps and other map services, where you can see the ups and downs of the ground and the height of mountains and rivers, are built from LIDAR measurements.

This data is obtained by scanning the ground from above, using high-powered LIDAR units mounted on satellites, planes, drones, helicopters, and the like.

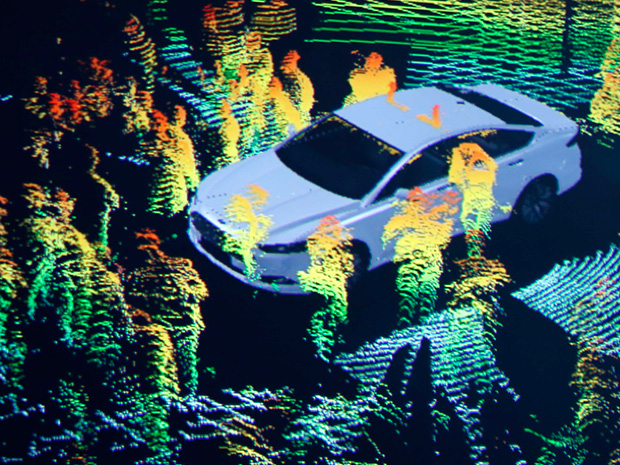

More people first heard about LIDAR when it started to be used in various types of automated vehicles. Equipping a car with LIDAR makes it possible to obtain information that cameras alone cannot provide, build 3D models of surrounding objects, better assist automated driving, prevent accidents, and so on.

So what does LIDAR look like on a cell phone?

This big black dot below the three cameras on the iPhone 12 Pro Max is LIDAR.

How does it work?

Let me show you a demonstration.

The iPad Pro’s LIDAR is the same as the one in the iPhone 12 Pro series. LIDAR works like this: the sensor emits many beams of infrared laser light (invisible to the human eye, so this demonstration was filmed with an infrared camera), which show up as many dots on a surface. The time it takes each pulse to travel to the surface and back to the sensor tells us the distance between that part of the surface and the LIDAR. With this distance information, we can calculate a 3D model of the object’s surface.
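The distance calculation behind this can be sketched in a few lines of Python. This is only an illustration of the time-of-flight principle, not Apple’s implementation; the function names and the 20-nanosecond example value are my own assumptions.

```python
# Speed of light in vacuum, in metres per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance in metres for a measured round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the surface and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_for_distance(distance_m: float) -> float:
    """Inverse helper: time for a pulse to reach a surface and return."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # Hypothetical pulse that comes back after 20 nanoseconds.
    print(f"{distance_from_round_trip(20e-9):.3f} m")  # prints "2.998 m"
```

The numbers show why this is hard to build: a surface 3 metres away returns the pulse in about 20 billionths of a second, so the sensor has to time the light extremely precisely.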

In fact, some of you may know that there are many Android phones that have a built-in TOF (Time-of-flight) sensor, which can also do 3D modeling. So what is the difference between TOF and LIDAR?

You can see the comparison here, with the iPad Pro’s LIDAR and Samsung’s TOF sensor below.

Simply put, the TOF sensor emits a diffused beam of light, which is probably not a narrow laser beam, so what you see is one huge spot. With LIDAR, you see a matrix of dots.

What is the main difference between the two? Both measure the time it takes for light to come back after hitting the object’s surface, and from that get the distance between that part of the surface and the lens. But the TOF sensor used on Android phones is less accurate and less expensive, because it only shoots one beam of light, and that beam is probably not a laser, since it spreads into a large spot. LIDAR has to use a laser, because a laser beam stays narrow and spreads very little, no matter how far it travels. LIDAR also requires multiple beams of light. I haven’t followed the technical details, but such a small component is unlikely to rely on rotating parts to generate multiple pulses, so it probably contains no moving parts at all. Either way, it costs a bit more.

So Apple uses the more expensive LIDAR, which is also more accurate and more stable.

That’s one of the basic principles of LIDAR.

Next, let’s see what LIDAR can do.

I’ll use one of Apple’s own ARKit demos, a 3D scene reconstruction, to show you how it works.

The demo is called Visualizing and Interacting with a Reconstructed Scene, and you can download the code from the link.

This scene is my house. You can see very clearly that all the furniture in my house is covered with a fine grid. This mesh is the real-time 3D model of my home that the iPhone builds from LIDAR and camera images.

If you install the demo app on your phone, try covering the LIDAR with your finger while using it. You will find that its real-time modeling ability disappears immediately: the existing wireframe is still there, but no new geometry can be modeled.

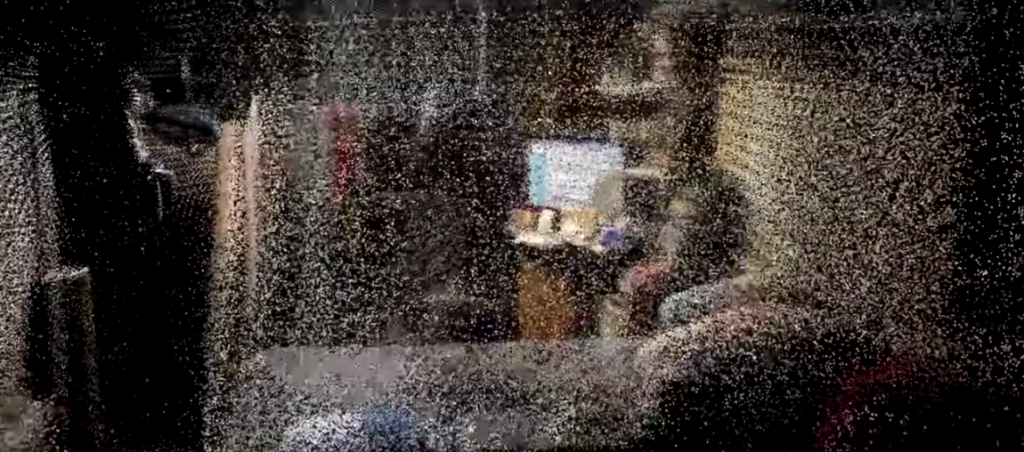

You can also look at a point cloud example called Visualizing a Point Cloud Using Scene Depth.

I’m not going to show the video for this example. A point cloud is another way of describing 3D space. The principle is the same as in the previous demo, but the result is expressed differently. The picture below is a 3D point cloud in my bedroom, as seen through my bedroom curtains.

Simply put, if you want to develop a program that needs to sense and model its surroundings, you need LIDAR.
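To make the point cloud idea more concrete, here is a minimal Python sketch of how a depth map (one distance per pixel) can be turned into 3D points using a standard pinhole camera model. It is not the code from Apple’s demo; the function name, the parameter names, and the tiny 2×2 depth map are assumptions made for illustration.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def depth_map_to_point_cloud(
    depth: List[List[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point3D]:
    """Unproject a depth map (in metres) into camera-space 3D points.

    fx, fy are the focal lengths in pixels and (cx, cy) is the principal
    point. Pixels without a valid depth (<= 0) are skipped.
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth):        # v: pixel row
        for u, z in enumerate(row):        # u: pixel column
            if z <= 0:
                continue
            # Pinhole model: scale the pixel offset from the centre by depth.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # Hypothetical 2x2 depth map; one pixel has no depth reading.
    tiny_depth = [[2.0, 2.0], [0.0, 2.0]]
    for point in depth_map_to_point_cloud(tiny_depth, 1.0, 1.0, 0.5, 0.5):
        print(point)
```

Each valid pixel becomes one point, so a full-resolution depth frame yields many thousands of points per frame. A real app would also transform them from camera space into world space using the device pose.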

So what can LIDAR do?

First of all, it can enhance the effect of photography.

In fact, this was already explained in Apple’s announcement. LIDAR can improve autofocus accuracy and reduce focus time. Especially in low light, Apple says LIDAR can make focusing up to 6 times faster.

Apple also showed a sample shot as a demonstration.

We can see that although it’s clearly a night scene, it looks bright and well-focused, details are clear, and the background bokeh is very accurate.

LIDAR can also greatly improve AR games.

But before we demo an AR game, we can demo another example first.

In general, today’s AR games work by finding a plane in the real world, such as a table, and then placing the game scene on that plane.

This demo is called Tracking and Visualizing Planes.

The picture below is the demo. On the left is my iPhone 12 Pro Max, and on the right is my iPhone X.

It’s clear that the iPhone 12 Pro Max is much better at modeling and perceiving its surroundings than the iPhone X.
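How ARKit detects planes internally is not something I know, but the general idea of “finding a plane” in a set of 3D points can be sketched with a simple least-squares fit. The Python below is a generic illustration under my own assumptions: points are (x, y, z) with z as height, the surface is not vertical, and the names `fit_plane` and `is_flat` and the 1 cm tolerance are hypothetical.

```python
from typing import List, Sequence, Tuple

Point3D = Tuple[float, float, float]


def _det3(m: Sequence[Sequence[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (
        m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
        - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
        + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    )


def fit_plane(points: List[Point3D]) -> Tuple[float, float, float]:
    """Least-squares fit of z = a*x + b*y + c; returns (a, b, c)."""
    n = float(len(points))
    sx = sum(p[0] for p in points)
    sy = sum(p[1] for p in points)
    sz = sum(p[2] for p in points)
    sxx = sum(p[0] * p[0] for p in points)
    syy = sum(p[1] * p[1] for p in points)
    sxy = sum(p[0] * p[1] for p in points)
    sxz = sum(p[0] * p[2] for p in points)
    syz = sum(p[1] * p[2] for p in points)
    # Normal equations of the least-squares problem.
    normal = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]
    d = _det3(normal)
    if abs(d) < 1e-12:
        raise ValueError("points are degenerate (too few, or collinear)")
    coeffs = []
    for col in range(3):  # Cramer's rule: swap in rhs one column at a time
        m = [row[:] for row in normal]
        for r in range(3):
            m[r][col] = rhs[r]
        coeffs.append(_det3(m) / d)
    return coeffs[0], coeffs[1], coeffs[2]


def is_flat(points: List[Point3D], tolerance_m: float = 0.01) -> bool:
    """True if every point lies within tolerance_m (in z) of the fitted plane."""
    a, b, c = fit_plane(points)
    return all(abs(z - (a * x + b * y + c)) <= tolerance_m for x, y, z in points)
```

The denser and more accurate the depth points are, the more reliable a test like this becomes. That fits with what the comparison shows: a device with a depth sensor has far more 3D points to work with on a soft, low-texture surface.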

Let’s move on to an example of a real game, Angry Birds VR, which I think is one of the most fun AR/VR games out there.

First of all, I can put this game scene on my bed. As the example shows, an iPhone X may fail to recognize the bed as a flat surface, but the iPhone 12 Pro Max recognizes it successfully.

The good thing about this kind of AR game is its unusual sense of place. It is like playing with building blocks: you can play on the table, on the bed, or on the floor, and the game can interact with the furniture in your house. A normal game can’t do that, because it can only be played against a virtual background.

LIDAR doesn’t just find more planes, which lets your AR game be laid out better. It also helps the game understand its surroundings better. More games may come in the future that feel completely different with and without LIDAR, but even for today’s games it is already a huge improvement.

LIDAR can scan objects and even houses in 3D.

You can scan your house, but you can also scan small things, like a toy. You can scan the house and then simulate a renovation to preview the remodel. You can also scan an existing toy and 3D print it, or use 3D design software to modify it into a unique toy design of your own and then print that.

Today I just want to talk about the example of house scanning. There is a famous real estate website in China called “Chain Home”. On Chain Home’s website, any listing with this icon on it is a real estate listing with VR.

You can try it on Chain Home’s website and app. You can click and drag inside the VR listing to see every corner of every room. You can also directly view the 3D model of the house.

It’s very convenient and an almost perfect substitute for a site visit, conveying much more information than photos of the house. At one point I even made a game of looking at the interiors of houses in different areas of Shanghai: what the old Republican-era houses in the former French Concession look like inside today, what some of the newer high-end apartments look like inside, and so on. The listings even include some historical buildings, some of which are still inhabited and are on the market for rent or sale.

So how does Chain Home produce these VR listings? It has a set of VR scanning equipment, which is very specialized.

Of course, Chain Home has also developed a series of simplified devices. However, both the professional equipment and these more lightweight scanners share a problem: because they are specialized equipment, the average person does not know how to use them or what to do with the data after scanning.

So Chain Home has another process.

You have to place an order online and schedule an appointment. A professional then comes to scan the house, the results are generated, and the house you want to sell gets its VR information. This process is a bit complicated and requires a lot of communication between the homeowner or the real estate agent and Chain Home.

But now with LIDAR, there are some free apps that already scan quite well. For example, I highly recommend an app called 3D Scanners App.

With it, even ordinary people can digitize, VRize, and 3D model their houses. The advantage of this App is that it is free and that it supports exporting in various 3D formats. I also heard that some professional decorators and designers are using this App, which helps their work.

This app scans very well, and the way it scans is straightforward. After you start scanning, areas that have already been scanned are covered in purple. All you have to do is turn every area of the house purple.

You can scan from all angles, and the angles that you have scanned will appear in the final model.

There is no need for specialized equipment anymore. A cell phone is all you need, and you don’t need professionals or professional software for the rest of the process.

The professional scanning method is to have a professional set up a specialized machine and press a button, after which it rotates automatically and captures the data. The professional then picks the next key position, sets up the device again, and lets it scan automatically. A house may need 6-7 such setups.

After this data is collected, it needs to be brought back to the company, where professionals process and merge it into a single model of the house.

The app doesn’t require any expertise. It’s like playing a game: you just keep going until the whole house is covered in purple. All the data processing is done by the app.

This could lead to all sorts of business models. In the future, maybe any website that sells something could provide 3D models of its products, not just photos. Real estate agencies, short-term rental sites like Airbnb, and the like could provide 3D models of houses. After all, the provider of the goods, or the homeowner, can now make the 3D model themselves instead of hiring a professional to scan it.

That’s definitely not all; the rest is really up to the imagination.

LIDAR can do more than what I’ve described here. By installing LIDAR on the iPhone 12 Pro series and iPad Pro, Apple has suddenly handed a very specialized capability to millions of users around the world. If you can afford one of these devices, you can have this capability.

What matters more is not what has already been discussed, but what new uses will appear in the future. This is something I’ve been thinking about, and it’s one of the main reasons I had to buy the iPhone 12 Pro Max this year.

What I hope is to give some inspiration to developers, ordinary users, and professionals by giving them an idea of how this sensor works, what it can do, and where it is currently used.

And what I’d really like to see is more people finding more uses for such a piece of “black technology”.

For example, I’ve talked before about how the parking lots underneath many particularly large shopping malls are like mazes where you can’t find your way. With LIDAR, we could get out of the car, turn on the LIDAR, and build a model as we walk out of the parking lot toward the mall entrance. This gives us a path map and a 3D model of the route. When we come back from the mall, we can navigate back to the car based on this path map and 3D model.
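The “path map” part of this idea can be sketched very simply, assuming the phone can report its position in metres as you walk (for example from its AR tracking). The Python below is only a thought experiment, not a working navigation app. The class name `BreadcrumbTrail`, its methods, and the 1-metre spacing are all hypothetical.

```python
import math
from typing import List, Tuple

Position = Tuple[float, float, float]


class BreadcrumbTrail:
    """Records waypoints on the way out so the route can be retraced."""

    def __init__(self, min_spacing_m: float = 1.0) -> None:
        self.min_spacing_m = min_spacing_m
        self.waypoints: List[Position] = []

    def record(self, position: Position) -> None:
        """Store a position only if it is far enough from the last waypoint."""
        if (
            not self.waypoints
            or math.dist(self.waypoints[-1], position) >= self.min_spacing_m
        ):
            self.waypoints.append(position)

    def route_back(self) -> List[Position]:
        """Waypoints in reverse order: from where you are back to the car."""
        return list(reversed(self.waypoints))

    def length_m(self) -> float:
        """Total walked distance along the recorded waypoints."""
        return sum(
            math.dist(a, b) for a, b in zip(self.waypoints, self.waypoints[1:])
        )


if __name__ == "__main__":
    trail = BreadcrumbTrail()
    # Hypothetical positions reported while walking away from the car.
    for pos in [(0.0, 0.0, 0.0), (0.2, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 0.0, 2.0)]:
        trail.record(pos)
    print(trail.route_back())
    print(trail.length_m())
```

A real version would still have to solve the hard part, which is recognizing where you are when you return. That is where the 3D model built with LIDAR would come in.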

Also, I have a particularly exciting idea that I’m thinking about and getting ready to develop myself, and maybe soon I’ll be able to show you a demo of it. I’ll probably open source it when it’s done.

Feel free to leave a comment and discuss. Tell me what you think about this technology. Thanks.

Some of the demos can’t be shown perfectly in this article because of formatting restrictions, but you can watch my video on YouTube if you want more information. (The video is in Chinese with English subtitles.)