Recently, Apple announced the M1 chip and released M1 (Arm-based) versions of three Macs: the MacBook Air, the 13-inch MacBook Pro, and the Mac mini. The M1 is the first of the chips Apple calls Apple Silicon.

As developers, we actually knew about this much earlier. We also got our hands on the DTK (Developer Transition Kit) to test how well Macs with an Arm CPU, macOS, and our own Mac software worked together.

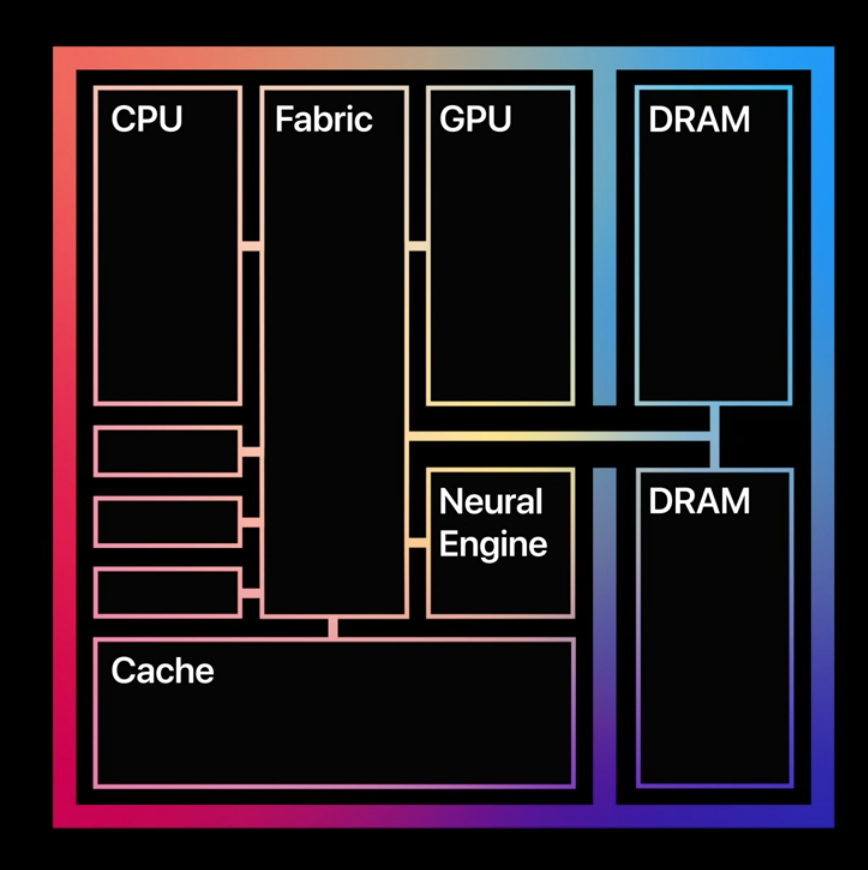

The DTK is essentially an Arm version of the Mac mini, but it uses the A12Z chip, which is not exactly the same as the M1. Judging from the announcement on the 11th, the M1 is better than the A12Z: it integrates the CPU, GPU, and security chip, with the memory in the same package. From an instruction set perspective, however, it is the same as the DTK.

Why is Apple doing this? I'll focus on a few points.

- First, performance, and getting rid of Intel's control over, or influence on, Apple.

- Second, energy saving and battery life.

- Third, price.

- Fourth, iOS apps can now run on Macs.

Getting Rid of Intel's Control Over Apple

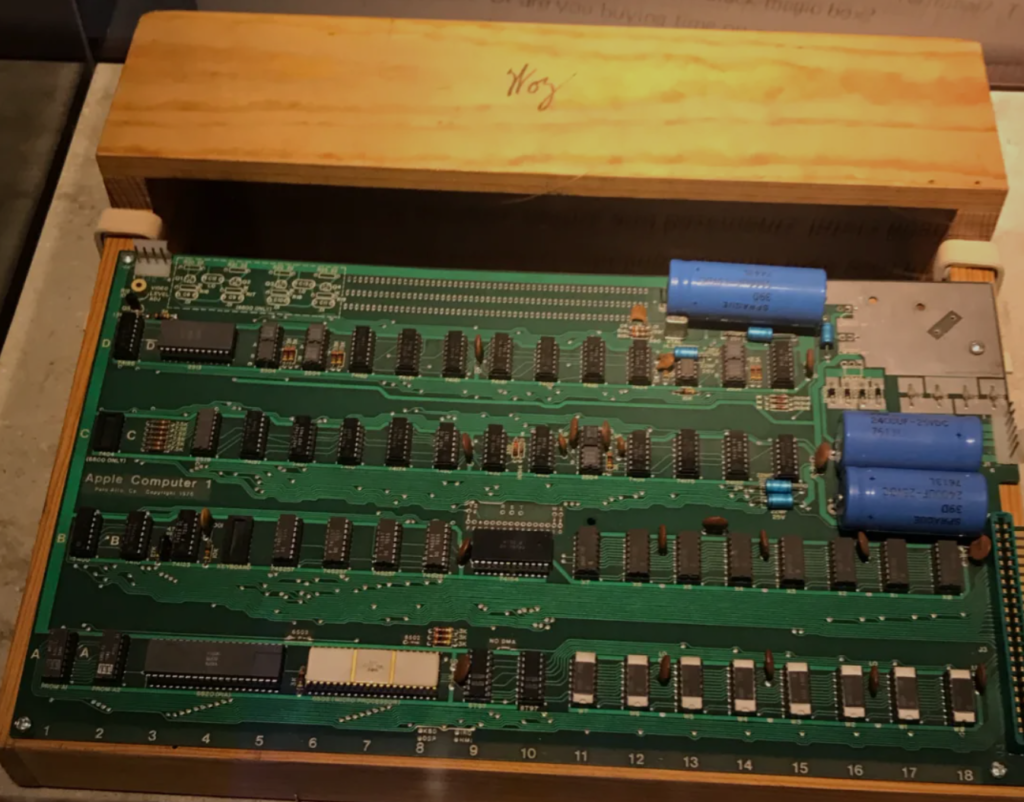

First, a little history. Apple built the Apple I in 1976, but most people have never actually seen one; all they've seen are photos on the Internet.

When I visited the Computer History Museum in Silicon Valley, I made a point of looking at the Apple I. It was sold as just a circuit board, with no keyboard, no mouse, and no display.

In those days there was a group called the Homebrew Computer Club. Many people today may find that era hard to picture. There were large computers back then, but they belonged to governments, big companies, oil companies, and the like, and they were as big as a house. Ordinary people couldn't own a computer because computers were far too expensive. People who wanted one anyway used cheap chips and cheap circuitry to build their own low-performance machines.

The Apple I was one of the hottest of these machines, and there were many others. Before the Apple I came the Altair 8800, one of the first popular so-called personal computers: a computer you could afford and assemble yourself.

The Apple I was Apple's first product, but its most famous early product was the Apple II. Both used the 6502 chip, which is interesting because if you played Nintendo's early Famicom (FC), that console also used a 6502-based chip.

In 1984, Apple introduced the Mac, the first commercially successful personal computer with a graphical interface, and the Mac is still a popular product today.

Unlike the Apple II, the Mac used Motorola's 68000 chip. But the Wintel alliance of Intel and Microsoft produced very cheap PCs with good performance, and Motorola's 68000 line couldn't keep up. So Apple, Motorola, and IBM teamed up to create the PowerPC chip.

In other words, Apple went through a migration from the 68000 to PowerPC. Then, several years after Steve Jobs returned to Apple, it migrated again, from PowerPC to Intel. This time, Apple is migrating from Intel to Arm.

This may sound like it has little to do with ordinary users, and in a sense it doesn't. The reason I bring it up is that Apple has now gone through three migrations: 68000 to PowerPC, PowerPC to Intel, and Intel to Arm.

In none of them did Apple really abandon its users. Apple worked through every scenario needed for a smooth migration, especially in the last one, which I lived through myself.

The first Mac I bought was a PowerPC-based Mac laptop, and around that time Apple introduced two major features. The first was the Universal binary (a "fat" binary): a single executable file containing code for both CPUs, that is, both PowerPC machine code and Intel machine code.

If you build a Mac program in this format, it runs on both PowerPC and Intel Macs.
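To make the idea of a fat binary concrete, here is a minimal Python sketch that reads the header of a Mach-O universal ("fat") file, the container format Mac executables use for this. It relies on the header layout and CPU type constants from Apple's `<mach-o/fat.h>` and `<mach/machine.h>` (a big-endian magic number `0xCAFEBABE`, a slice count, then one 20-byte entry per architecture). The two-slice header it parses is synthetic, built in memory for illustration, not taken from a real app.

```python
import struct

# Constants from <mach-o/fat.h> and <mach/machine.h>.
FAT_MAGIC = 0xCAFEBABE
CPU_ARCH_ABI64 = 0x01000000
CPU_TYPE_X86 = 7
CPU_TYPE_ARM = 12
CPU_TYPE_POWERPC = 18

CPU_NAMES = {
    CPU_TYPE_POWERPC: "ppc",
    CPU_TYPE_X86: "i386",
    CPU_TYPE_X86 | CPU_ARCH_ABI64: "x86_64",
    CPU_TYPE_ARM | CPU_ARCH_ABI64: "arm64",
}


def list_slices(data: bytes) -> list[tuple[str, int, int]]:
    """Return (arch, offset, size) for each slice listed in a fat header."""
    # The fat header is big-endian: magic number, then the number of slices.
    magic, nfat_arch = struct.unpack_from(">II", data, 0)
    if magic != FAT_MAGIC:
        raise ValueError("not a fat binary")
    slices = []
    for i in range(nfat_arch):
        # Each fat_arch entry: cputype, cpusubtype, offset, size, align.
        cputype, _subtype, offset, size, _align = struct.unpack_from(
            ">iiIII", data, 8 + 20 * i
        )
        slices.append((CPU_NAMES.get(cputype, hex(cputype)), offset, size))
    return slices


# Synthetic header: one PowerPC slice and one 32-bit Intel slice.
header = struct.pack(">II", FAT_MAGIC, 2)
header += struct.pack(">iiIII", CPU_TYPE_POWERPC, 0, 4096, 1000, 12)
header += struct.pack(">iiIII", CPU_TYPE_X86, 3, 8192, 2000, 12)
print(list_slices(header))  # [('ppc', 4096, 1000), ('i386', 8192, 2000)]
```

The loader only needs this small table to find the machine code for its own CPU; the slices themselves are ordinary single-architecture executables stored at the listed offsets.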

But some developers no longer maintain their old software, so it only ships with PowerPC machine code. For that, Apple made Rosetta, which lets old PowerPC programs run on Intel Macs by translating their PowerPC code on the fly.

This kept the pressure on users very low. Old programs kept running, while developers who adopted the new platform shipped Universal binaries that ran faster than before. Users and developers were both happy.

For the move from Intel to Arm, Apple did the same thing and made Rosetta 2. It translates Intel (x86-64) programs so that they run on the Arm chip, which means your program can run fast even without an Arm version. Rosetta 2 is said to perform much better than the original Rosetta, and many people have found that older Mac software that isn't native to Arm runs very fast on it.
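The strategy above, run native code when it exists and otherwise fall back to translation, can be sketched as a small decision function. This is a hypothetical illustration: `choose_launch_mode` and its string labels are invented here, and the real choice is made by macOS itself, with Rosetta 2 doing the translation of x86-64 code. Rosetta 2 does not run PowerPC code, so PowerPC-only apps land in the unsupported case.

```python
def choose_launch_mode(host_arch: str, slice_archs: list[str]) -> tuple[str, str]:
    """Hypothetical loader decision for an app on a given Mac.

    Returns (mode, arch): run a native slice if one matches the host; on an
    Arm Mac, fall back to translating an Intel (x86_64) slice, which is the
    job Rosetta 2 does; otherwise report that the app cannot run.
    """
    if host_arch in slice_archs:
        return ("native", host_arch)
    if host_arch == "arm64" and "x86_64" in slice_archs:
        return ("translated", "x86_64")
    return ("unsupported", "")


print(choose_launch_mode("arm64", ["x86_64", "arm64"]))  # ('native', 'arm64')
print(choose_launch_mode("arm64", ["x86_64"]))           # ('translated', 'x86_64')
print(choose_launch_mode("arm64", ["ppc"]))              # ('unsupported', '')
```

Preferring the native slice first is what lets a Universal app run at full speed on both kinds of Mac, while translation only covers the gap for apps that were never rebuilt.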

I tell this history because many people are asking: Microsoft already tried to bring desktop Windows to Arm, and the Surface RT basically failed. Why did it fail?

Will Apple fail this time as well?

I don’t think so.

When the Surface RT was first released and I learned about its solution, I knew it was going to fail.

Of course, this isn't to say Microsoft was technically behind. Starting with Windows NT, and then Windows 2000, Windows could run on different CPUs. Microsoft's older operating systems, such as DOS, Windows 95, and Windows 3.2, could only be installed on Intel PCs, but with Windows NT and Windows 2000, Microsoft extensively updated the underlying architecture over time.

Today's Windows is actually a descendant of the NT/2000 line, so it can be installed on different CPUs. Microsoft made a cut-down version of Windows, called Windows RT, that runs on Arm. But unlike Apple, Microsoft didn't build a compatibility layer like Rosetta, nor did it push hard for a fat-binary-style format. Meanwhile, many people bought the Surface RT because it looked very similar to the Surface Pro: they thought the Pro was too expensive, so they bought the RT instead.

But after buying the RT, they found that a lot of software wouldn't run. The Pro could run any Windows software, but the RT could only run software built specifically for RT. Naturally, the Surface RT failed.

Apple's move to Arm is very different from Microsoft's. Apple's new Arm-based Macs can run most of the software that ran on the old Macs, so users will naturally be happy to buy the new machines.

This is why I think Apple can pull it off: it has done this twice before, with outstanding results. Now let's talk about why Apple is doing this. Many people call Intel the "toothpaste factory." Why? Because Intel seems to squeeze out performance a little at a time, like toothpaste, and each generation's upgrade doesn't seem to improve performance much.

One explanation is that Intel is simply a greedy businessman. As people in China like to put it: Intel, you're unscrupulous, you squeeze out a little performance at a time so you can make more money from me.

But there's also a big technical reason: Intel has to stay compatible with the very oldest CPUs in its family, which is a huge burden. You can loosely think of a current Intel CPU as a stack of older CPUs. I'm not saying that's literally true, only that staying compatible with so many older products weighs heavily on the design.

In other words, a new Intel CPU can have lots of new features and an innovative architecture, but it can't get rid of some of the old parts, which leads to a lot of redundancy and very high complexity. Arm, by contrast, is like building the whole thing from scratch.

Intel carries a very big burden. Another problem is that Intel has had a monopoly for too long. It has no real competitors in the computer market: the server market is basically Intel's, and so are the PC market and the Mac market.

Intel also ran into trouble with its manufacturing process. Intel's process roadmap differed from TSMC's. TSMC made it to five nanometers, while Intel bet on its own approach, and that approach didn't pan out.

In other words, Apple can now use a five-nanometer process, but Intel's CPUs can't yet. Intel has reportedly signed a contract with TSMC and is preparing to use it, but much of its own preparation and early R&D investment will go to waste, or at least can't be used for now.

That is the first big problem. Whether it comes from Intel being an unscrupulous businessman, from its monopoly, or from its stumble on chip manufacturing, the actual result is the same: Intel is a toothpaste factory, and the performance gains it delivers are smaller than they should be.

The second problem is that Apple is a very punctual company that updates its products once a year, which has always been the case for the iPhone and iPad, and almost always the case for the Mac.

Apple has its own rhythm: it likes to announce things at WWDC and then hold further events later in the year. We all know roughly when each year's events will be, give or take a few days.

This has a big advantage: Apple can concentrate all its marketing at that moment, and Apple fans like to tune in then. Because there is an update every year, people also think, "I won't buy a computer now, because it will be updated in a month." The downside, of course, is that Apple's sales drop as an update approaches.

All in all, though, this is not a bad thing for Apple. Competitors know the schedule too, and they like to hold their events after Apple's. Once Apple's event is over, Apple's lineup is locked in, and a competitor can announce a more powerful chip and beat Apple directly.

If you use Intel and I use Intel, I can launch a month after you with Intel's newest chip and hit you directly. Apple has already launched and there's no way to change that: the product is out, and even if it gets upgraded, Apple can't hold another event for it.

The same thing happens with phones: a rival waits until Apple's event is over, then Qualcomm releases a new chip, the rival uses it immediately, and claims to beat Apple.

Competitors love this trick because your launch rhythm never changes.

Apple's rhythm is also severely constrained by Intel. Apple is not Intel's only customer, so Intel follows its own R&D schedule rather than Apple's cycle.

Apple users expect a major update every year. This year's MacBook, for example, should be faster than last year's, and much faster would be even better. But if you're about to launch a MacBook and Intel has no new product, how are you going to improve performance? Your CPU doesn't fall from the sky; you have to buy it from Intel. So if Intel falls behind, Apple bears the consequences, and it has done so many times in history. The same goes for graphics cards: most of us think NVIDIA cards are better than AMD cards, and NVIDIA's current cards perform very impressively, yet Macs ship with AMD graphics.

Intel's pace differs from Apple's, which is quite annoying for Apple, so Apple needed to solve this problem, and solving it is very good for Apple. Now Apple can say: I make the CPU myself, so I can release the CPU and the computer together every year. If I want this year's computer to be some number of times faster than last year's, I can design for that, because the chip is mine.

The manufacturing process is another core problem: Intel's chips have little room left for improvement. With Arm, Apple has much more control. Intel sells you what it has, and you can't get it to fully customize a chip for you. Intel is such a big company that it may offer minor customization, but never a custom design of the whole chip; it has to follow its own rhythm.

Arm, by contrast, essentially only licenses an architecture, so Apple can do whatever it wants with the chip design, the packaging, and the customization.

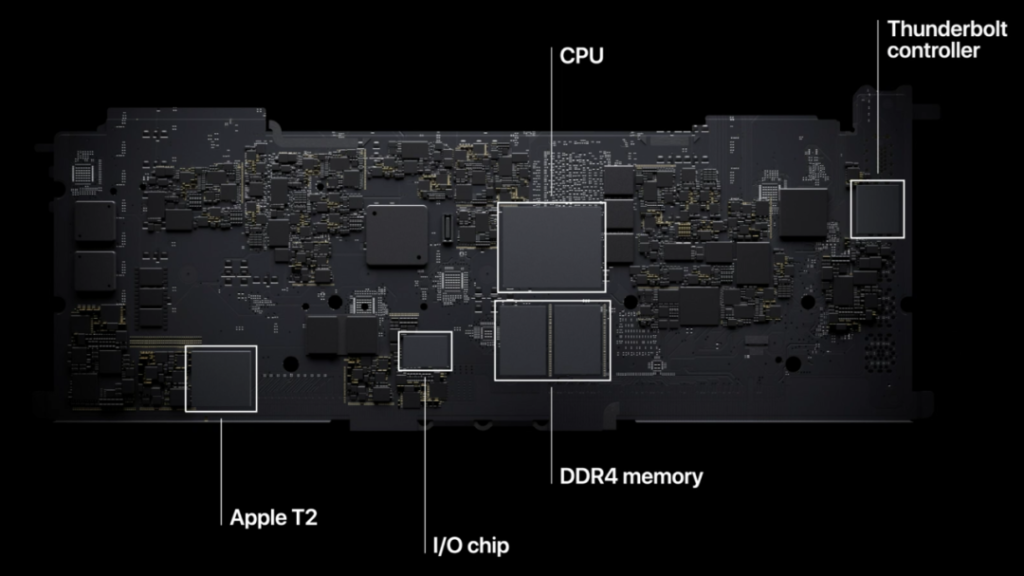

The hardware architecture of Apple computers used to look like this.

The CPU, Thunderbolt controller, security chip, I/O chip, and memory are all in separate packages, and they are all connected via the system bus.

The biggest difference with the M1 is that Apple moved the memory into the same package as the chip.

The advantage is that memory access is much faster. Previously, the memory was not next to the CPU, so every access had to go over the system bus. Both the CPU and the GPU reached memory through that bus, so access speed and bandwidth were effectively set by the bus. With the memory and the processor together, memory can be accessed much faster than before, in terms of both latency and bandwidth.

With the memory packaged right next to the processor, the chip can use memory bandwidth as fully as it wants. Apple didn't give a specific number this time, but it could plausibly be much faster, and the speedup would be very noticeable for memory-intensive tasks such as processing large images and video.
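Bandwidth figures like these come down to simple arithmetic: theoretical peak bandwidth is the transfer rate multiplied by the width of the memory interface. A minimal sketch, assuming LPDDR4X at 4266 MT/s on a 128-bit interface; these are figures widely reported for the M1, not numbers Apple gave at the event, so treat them as assumptions.

```python
def peak_bandwidth_gbps(mega_transfers_per_s: float, bus_width_bits: int) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return mega_transfers_per_s * 1e6 * bytes_per_transfer / 1e9


# Assumed configuration: LPDDR4X at 4266 MT/s on a 128-bit interface.
print(round(peak_bandwidth_gbps(4266, 128), 1))  # 68.3
```

This is only a theoretical peak; it doesn't capture the latency benefit of short on-package connections, or the fact that the CPU and GPU draw from one shared pool of memory.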

But Intel can't build Apple a custom CPU with 8GB or 16GB of memory in the package, because Intel serves a wide variety of customers, many of whom have their own customization needs. That would be very complicated and would involve a very complicated supply chain.

Cook is a master at working the supply chain. To put it bluntly, ever since Steve Jobs died, many people have said Apple can't do this or that, yet Apple's profits keep going up. Why? Because Cook knows how to squeeze the supply chain. Integrating memory with the CPU is actually risky: if users want different amounts of memory, Apple has to make different CPU variants.

The good news is that Cook is a master of the supply chain, so he should be able to solve this problem. Being a "supply chain master" matters because if you produce more than 80,000 units with 8GB of memory, or 160,000 units with 16GB, and can't sell them, you're stuck with them.

The average person may not understand supply chain management, which includes inventory management. Inventory management doesn't just mean that the goods will sell sooner or later. For large companies today, inventory turnover is a very important metric: whether a product sells three days or one month after it is made has a tremendous impact on overall cash flow.

Keeping the CPU and memory separate is the most inventory-friendly option. For example, with 3 CPU models and 16 memory configurations, I can put together 3 × 16 = 48 combinations while stocking only 3 + 16 = 19 kinds of parts. But if CPU and memory are combined and I still want to offer users different performance and memory options, how many kinds of chips do I have to make? If I make all 48, it becomes very hard to know which ones to make more of and which ones less of. Once you combine the parts, you lose the flexibility of combining them later.

If you don't forecast accurately which configurations will sell well and which won't, and plan production accordingly, you'll end up with a huge inventory backlog. That's why much of the industry doesn't put CPU and memory together. It's not just a technical problem; it's an economic one.
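The inventory arithmetic above can be checked with a few lines of code. A minimal sketch using the example's own counts (3 CPU models, 16 memory configurations); `sku_counts` and the part names are hypothetical labels, not real product SKUs.

```python
from itertools import product


def sku_counts(cpu_options: list[str], memory_options: list[str]) -> tuple[int, int]:
    """Compare how many distinct items must be forecast and stocked.

    Separate: stock each CPU and each memory part, combine at assembly time.
    Fused: every CPU+memory pairing is its own chip that must be built ahead.
    """
    separate = len(cpu_options) + len(memory_options)
    fused = len(list(product(cpu_options, memory_options)))
    return separate, fused


cpus = [f"cpu{i}" for i in range(3)]
memory = [f"mem{i}" for i in range(16)]
print(sku_counts(cpus, memory))  # (19, 48)
```

The gap grows with every option added: separate parts scale as a sum, fused parts as a product, which is why forecasting errors hurt more once the memory is in the package.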

Whether this is good or bad, we'll have to judge by the results. To take the simplest example: all cell phones used to have replaceable batteries, and after the iPhone appeared, non-replaceable batteries became common. Good or bad, I think we've all adapted by now; phones without replaceable batteries work out just fine.

So that is the first big reason: Apple had to get rid of Intel's control, or Intel's drag on it, and drop a teammate that was holding it back.

Energy Savings and Endurance

Intel has actually made Arm chips too, but it didn't do well with them. Intel has always dominated the PC and server markets, especially after Apple's move from PowerPC to Intel was completed, when Intel effectively took over the entire PC market and much of the server market. But Intel has always wanted to succeed in embedded chips as well.

Why hasn't it managed to? Intel hasn't done a good job of reducing power consumption. In a PC or server, or one of those big tower desktops, power consumption hardly matters: you can fit a one-kilowatt power supply and still have power left over for fancy spinning lights and liquid cooling.

The problem is that laptops and cell phones can't be charged anywhere at any time, so they place heavy demands on both performance and energy efficiency.

The Arm camp has done better here. People call Arm an embedded chip, but the first Arm chip was actually designed by Acorn, the company behind the BBC Micro (a computer built for a BBC project to popularize computing), as the processor for its next generation of desktop computers.

In fact, there is no absolute line between PC chips, server chips, and embedded chips. If a chip isn't powerful enough for servers, we call it a consumer or embedded chip. For a long time, Arm's performance really couldn't compare with Intel's, so it could only do consumer or embedded work. Conversely, however strong Intel's performance was, it couldn't go into cell phones because it consumed too much power, so it couldn't meet embedded needs.

In terms of its DNA, Intel is better at performance than at energy efficiency, while Arm is built for energy efficiency and consumes less power, which is why Arm is especially popular in embedded systems.

So this move makes sense for Apple. Look at its laptops, the Mac mini, and even the iMac: Apple puts a high priority on thin, light designs and low power consumption. Some people question that: "Why does it need to be so thin? If you make it that thin, the battery won't last, right?"

But Apple has always had a passion for thinness and lightness. Why? I think consumers do care about it. A lighter laptop alone may not change much, although it might sell better. But a laptop that is lighter and also lasts longer is genuinely impressive.

According to Apple's announcement, the MacBook Air can run for up to 18 hours in actual use (the figure given is for watching videos in the Apple TV app), while the 13-inch MacBook Pro can run for up to 20 hours. That is roughly double the approximately 10 and 8 hours previously quoted for the same product lines, which is very impressive.

Of course, rated hours don't always match real-world hours, but doubling battery life is a qualitative change for many users.

With Intel chips, it was hard to improve battery life much; with Arm, it can be improved significantly.

At this event in particular, Apple kept stressing one concept: the ratio of performance to power consumption. Its message wasn't simply "we're faster than you," even though the figures it gave say the Air is two to three times faster than the previous generation, and the 13-inch MacBook Pro three times faster.
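Both the battery-life claim and the performance-per-watt message reduce to simple ratios: with a fixed battery, runtime is stored energy divided by average power, so doubling runtime means halving average power draw. A minimal sketch; all numbers below are hypothetical and chosen only to make the ratios easy to read, not Apple's measurements.

```python
def battery_hours(battery_wh: float, avg_power_w: float) -> float:
    """Runtime in hours: stored energy (Wh) divided by average power draw (W)."""
    return battery_wh / avg_power_w


def perf_per_watt(score: float, power_w: float) -> float:
    """Performance per watt: a benchmark score divided by the power drawn."""
    return score / power_w


# Hypothetical: the same 50 Wh battery at half the average power draw.
print(battery_hours(50.0, 5.0), battery_hours(50.0, 2.5))  # 10.0 20.0

# Hypothetical: chip B is 3x faster but draws only 1.5x the power.
print(perf_per_watt(1000, 10.0), perf_per_watt(3000, 15.0))  # 100.0 200.0
```

The second case shows why "faster" and "more efficient" are different claims: chip B is three times faster, but its advantage per watt is only twofold.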

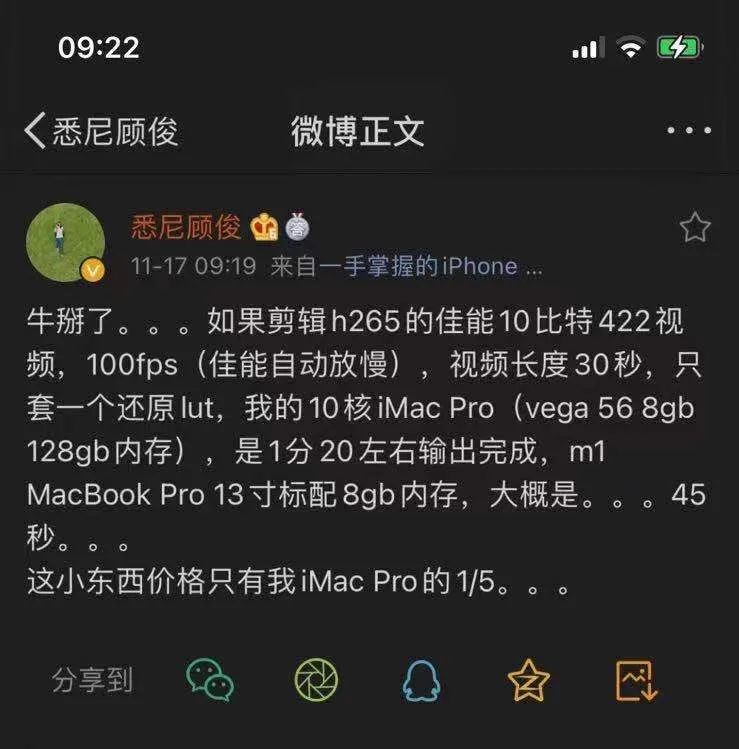

And I read a review in the past couple of days saying the 13-inch MacBook Pro scored higher than the previous-generation flagship, the 16-inch MacBook Pro, which was released only a short time ago.

Some people say benchmark scores aren't necessarily accurate, but they give you a general sense, and for products from the same family, the difference in scores is still telling.

Also today I read about a user who found that the 13-inch MacBook Pro compressed video faster than his higher-end iMac Pro.

I do a lot of video work myself, so performance is an absolute necessity, and I had planned to wait for a 16-inch Arm Mac before buying. Because of my poor eyesight, a big screen is a very important factor for me. But after seeing these numbers, I think I'll buy a 13-inch now for fun, and wait until the high-performance 16-inch version comes out before buying a used one.

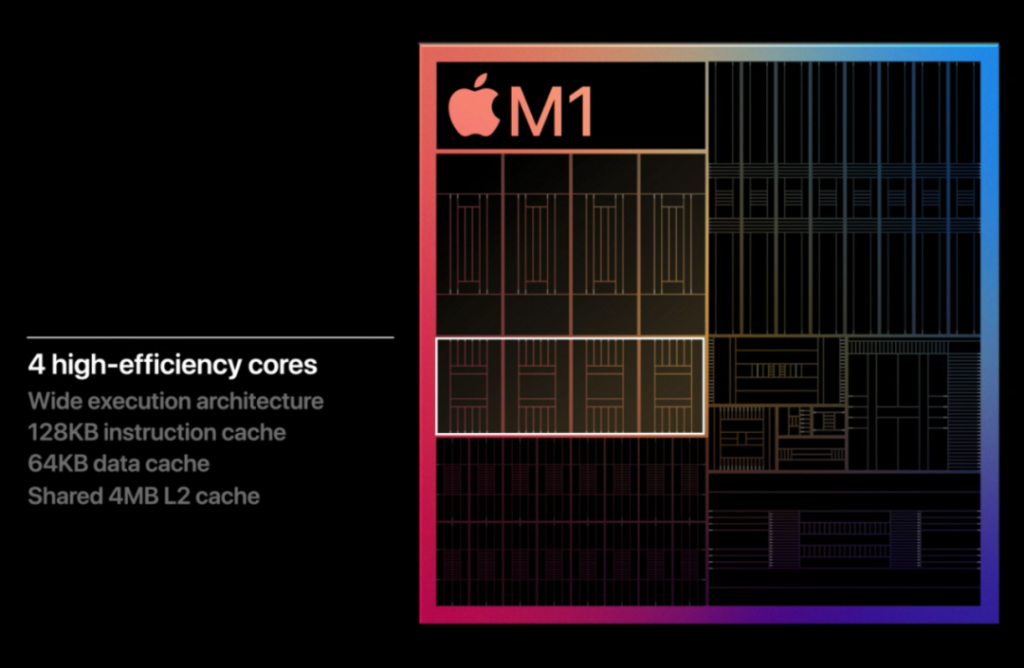

Apple says the M1 has four high-performance CPU cores, so when you do high-performance computing, or have many threads running, the work goes to those fast cores, which naturally use more power.

But in fact, for long stretches of time, everyone's computer is essentially idle and the CPU isn't running at full load. So the M1 also has four cores that are lower-performance but especially energy-efficient. They handle light workloads, which is how Apple reduces energy consumption.
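The split between four fast cores and four efficient cores can be illustrated with a toy placement policy. This is a hypothetical sketch, not how macOS actually schedules work: the real scheduler weighs many more signals (for example, the quality-of-service class an app assigns to its threads), and `Task` and `assign_cores` are names invented here.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    demanding: bool  # e.g. a video export (True) vs. checking mail (False)


def assign_cores(tasks: list[Task], p_cores: int = 4) -> dict[str, str]:
    """Toy placement policy for a chip with performance and efficiency cores.

    Demanding tasks get a performance core while one is free; everything else,
    including demanding tasks that don't fit, runs on the efficiency cores.
    Time-slicing and the number of efficiency cores are deliberately ignored.
    """
    placement: dict[str, str] = {}
    free_p_cores = p_cores
    for task in tasks:
        if task.demanding and free_p_cores > 0:
            placement[task.name] = "performance"
            free_p_cores -= 1
        else:
            placement[task.name] = "efficiency"
    return placement


tasks = [Task("mail", False), Task("video-export", True), Task("music", False)]
print(assign_cores(tasks))
# {'mail': 'efficiency', 'video-export': 'performance', 'music': 'efficiency'}
```

The point of the design is that the power-hungry cores only wake up for work that needs them; light background tasks never touch them.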

Judging from benchmarks and user feedback, Apple is doing it right. If Intel had thought of and built this kind of energy-saving design, Apple would of course have benefited too. But Intel didn't do it well, and Apple had no way to force Intel to improve. That is why it matters that Apple makes its own chips.

Steve Jobs liked to quote Alan Kay's line that people who are really serious about software should make their own hardware. That is part of Apple's philosophy: what Apple ultimately gives users is software, the operating system macOS, but that operating system must run on Apple's own hardware to perform at its best.

Today Apple goes a step further: people who are serious about software make their own hardware, and because Apple wants to push that hardware to the extreme, it is now making its own chips. That's what I mean.

The Price Factor

The price is actually easy to understand. Before, Intel sat in the middle, and Intel needs to make money, right? Now the CPU is Apple's own, and this time the memory is packaged into the chip as well. Two of the most expensive components in a computer are now under Apple's control and Intel's margin is gone, which of course saves money.

Cook is happy, because he can squeeze costs even further.

Apple, a very shameless company, won't cut its prices by as much as its costs fall, but it will cut them somewhat, because it wants more people to be able to afford its products so it can make more money.

Apple never lets costs, prices, and gross margin all fall together. What Cook has been doing is lowering costs so that Apple makes more money, while lowering prices a little so that users are happy, because prices do go down. Costs fall a lot and prices fall a little, but both fall, so it's a win-win for Apple and users; of course, you could also say Apple wins twice.
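The "costs fall a lot, prices fall a little" pattern is easiest to see through gross margin, the share of the selling price left after costs. A minimal sketch with made-up round numbers (not Apple's actual prices or costs):

```python
def gross_margin(price: float, cost: float) -> float:
    """Gross margin as a fraction of the selling price."""
    return (price - cost) / price


# Hypothetical numbers, not Apple's: cost drops by 200, price by only 100.
before = gross_margin(price=1000, cost=600)
after = gross_margin(price=900, cost=400)
print(round(before, 3), round(after, 3))  # 0.4 0.556
```

In this example the buyer pays less while the margin rises from 40% to about 56%, which is the "Apple wins twice" effect described above.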

iOS apps can run on Macs now

Now to what I think is one of the most important points: iOS apps can now run on the Mac.

In fact, you could already do something like this before: you wrote an iOS app, made some changes, and it could support the Mac. But the APIs for Mac development differ from those on iOS, and the iOS APIs are newer and more convenient, so many people don't like writing Mac programs.

So Apple made a tool called Mac Catalyst, which made porting much easier. Even so, only a few iOS developers took the initiative to port their apps to the Mac.

This time, with Arm CPUs in the Mac, most iOS apps can run directly on Arm Macs without any modification. Developers don't have to do anything.

This is a very powerful thing, and why is it powerful?

I found a 2020 statistic from a third party saying there are 1.8 million iOS apps on the App Store right now, and a statistic from another third-party platform saying there are fewer than 20,000 Mac apps on the Mac App Store.

The Mac has always lagged in the number of apps. Some Mac apps aren't on the Mac App Store, but you probably know that the number of Mac apps is really low, especially compared to iOS.

The other big issue is the number of developers. In 2018, Cook announced that there were 20 million iOS developers in the world. I looked for the number of Mac developers and couldn't find it; my guess is that it's probably fewer than 100,000.

Moving the Mac to Arm CPUs effectively connects the iPhone, iPad, and Mac. From now on, if you make an iPhone or iPad app, you are making an app for all three platforms.

Of course, not every app can run on the Mac. Some APIs aren't available there, such as HealthKit, and location is another example: a Mac's location isn't as accurate as an iPhone's, which has GPS. Apple's current strategy is to make all apps available on the Mac by default and let developers mark the ones they think won't work. Apple then uses a program to scan the APIs each app uses, and if an app uses an API that isn't currently available on the Mac, Apple proactively withholds that app from the Mac.
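The screening just described can be pictured as a set check: an app stays available on the Mac unless the developer opts out or it uses an API the Mac lacks. A minimal hypothetical sketch: `UNAVAILABLE_ON_MAC` holds only the HealthKit example from the text, and `eligible_for_mac` is an invented name, not Apple's actual review tooling.

```python
# Hypothetical list; Apple's real list of unavailable APIs is not reproduced here.
UNAVAILABLE_ON_MAC = {"HealthKit"}


def eligible_for_mac(used_apis: set[str], developer_opted_out: bool = False) -> bool:
    """Toy version of the screening described in the text.

    Available on the Mac by default; withheld if the developer opts out or
    if the app uses any API on the unavailable list.
    """
    if developer_opted_out:
        return False
    return not (used_apis & UNAVAILABLE_ON_MAC)


print(eligible_for_mac({"UIKit", "MapKit"}))     # True
print(eligible_for_mac({"UIKit", "HealthKit"}))  # False
```

Defaulting to "available" is what makes the catalog grow without any developer action; the opt-out and the API scan only remove the apps that would break.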

Apple is doing this screening now, so not 100% of iOS apps will run directly on the Mac, but I think probably 60 to 90 percent of apps should run on the Mac directly without any modifications, not even UI changes.

It's a tremendous move, and it instantly makes the number of apps available to Mac users huge.
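Putting the quoted numbers together shows the scale involved. A minimal sketch using the third-party figures cited earlier (1.8 million iOS apps, fewer than 20,000 Mac App Store apps) and the 60 to 90 percent estimate; integer arithmetic keeps the results exact.

```python
IOS_APPS = 1_800_000  # third-party 2020 estimate for the App Store, as quoted
MAC_APPS = 20_000     # quoted upper bound for the Mac App Store


def runnable_apps(ios_apps: int, percent: int) -> int:
    """Number of iOS apps expected to run unmodified, using integer math."""
    return ios_apps * percent // 100


for percent in (60, 90):
    count = runnable_apps(IOS_APPS, percent)
    print(f"{percent}%: {count:,} apps, over {count // MAC_APPS}x the Mac App Store")
# 60%: 1,080,000 apps, over 54x the Mac App Store
# 90%: 1,620,000 apps, over 81x the Mac App Store
```

Because the Mac figure is an upper bound, these ratios are lower bounds: even the pessimistic end of the estimate is more than fifty times the existing Mac catalog.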

All in all, it's a solid move by Apple. It looks good to me, and I'm looking forward to what comes next.